California Management Review

California Management Review is a premier professional management journal for practitioners published at UC Berkeley Haas School of Business.

by Sreevatsa Bellary and Gaurav Marathe

Image Credit | NiNalin Stock

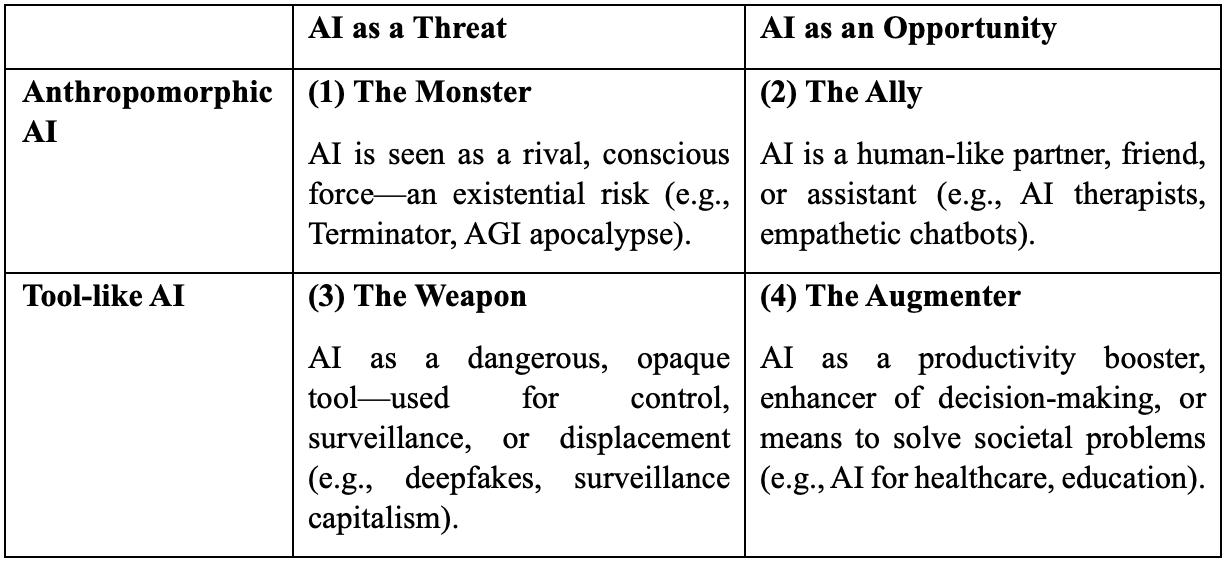

As Artificial Intelligence (AI) becomes integral to business, governance, and everyday decision-making, leaders face a growing paradox: the systems shaping our future are increasingly complex, yet the frameworks used to understand them remain narrative-driven. This article examines how dominant AI narratives act as cognitive scaffolds—simplifying complexity, enabling judgment, and guiding action in environments of low technical literacy. We introduce a 2×2 typology of AI narratives based on two dimensions: whether AI is perceived as a threat or opportunity and whether it is framed as a tool or an anthropomorphic agent. This yields four strategic frames—Augmenter, Ally, Weapon, and Monster—each carrying distinct implications for trust, policy, ethics, organizational learning, and governance. For executives and policymakers, understanding these narratives is not optional—it is foundational to making sound decisions in an AI-mediated world. How we frame AI may matter as much as how we design it.

Michael Haenlein and Andreas Kaplan, “A Brief History of Artificial Intelligence: On the Past, Present, and Future of Artificial Intelligence,” California Management Review, 61/4 (2019): 5-14.

Konstantin Hopf, Oliver Müller, Arisa Shollo, and Tiemo Thiess, “Organizational Implementation of AI: Craft and Mechanical Work,” California Management Review, 66/1 (2023): 23-47.

Artificial Intelligence (AI) is no longer a back-office innovation; it is now a core driver of strategic decision-making across industries¹. From streamlining operations in finance and healthcare to transforming talent acquisition, customer engagement, and risk management, AI is deeply embedded in the infrastructure of modern business². Algorithms increasingly influence critical decisions and shape markets, institutions, and competitive advantage.

Yet a paradox defines this moment: while AI’s impact grows, most decision-makers engage with it through narrative rather than technical comprehension. Popular discourse on AI is dominated by metaphors (“black boxes,” “thinking machines,” “predictive oracles”) that serve more as meaning-making tools than as precise explanations³. These narratives are not trivial. They function as cognitive scaffolding, enabling non-experts to interpret, judge, and act on complex systems despite deep epistemic gaps. In the absence of technical literacy, these scaffolds become the architecture for public understanding and policy-making.

To analyze how these narratives shape societal responses to AI, we propose a 2×2 typology structured along two axes: (1) whether AI is framed as a threat or an opportunity, and (2) whether it is understood as a tool or as a human-like agent. This framework yields four dominant narrative types: Augmenter, Ally, Weapon, and Monster. We outline the defining features of each, illustrate them with examples, and examine their implications across critical dimensions, including policy orientation, ethical framing, public trust, learning behaviors, and institutional readiness.

This paper contributes to the growing literature on sociotechnical imaginaries⁴,⁵ by emphasizing that how we talk about AI is not secondary to how we build it but constitutive of it. Narratives do not merely reflect attitudes; they shape attitudes, structure cognition, anchor expectations, and guide institutional design⁶. Active engagement with these scaffolds is essential. Left unchecked, they can lead to blind techno-optimism or paralyzing fear. If AI is to be governed wisely, we must interrogate the stories we use to make sense of it and educate people about them.

To create a reflective synthesis of diverse narratives of AI, we propose a typology based on two foundational dimensions:

Perceived Orientation: Is AI framed primarily as a threat or an opportunity?

Ontological Framing: Is AI imagined as a tool-like system, or as an anthropomorphic agent with human-like capabilities?

The two dimensions emerged from narrative tone (optimistic vs. anxious) and narrative locus (human-centric vs. machine-centric). These dimensions serve as intuitive cognitive anchors, allowing us to make sense of a sprawling and often contradictory narrative landscape. The tone dimension captures the emotional valence and moral orientation of narratives, whereas the locus dimension surfaces questions of agency and value—whether humans or machines are at the center of the imagined future. We observed that public and organizational narratives tend to pivot on two questions:

Is AI in service of human ends, or is it evolving intentions of its own?

And should we be hopeful or cautious about its trajectory?

Crossing these axes yields four dominant narrative types—each serving as a cognitive scaffold that shapes strategy, governance, and learning behavior in organizations.

Narrative type: Monster (threat; anthropomorphic agent)

Framing: AI is seen as a semi-autonomous force, capable of slipping out of human control and altering the course of civilization.

Typical usage: Advanced language models, speculative general intelligence, self-improving agents.

Example: Public calls for AI moratoriums after a leading lab’s model exhibits unpredictable behavior—sparking fears of runaway systems.

Organizational mindset: Crisis planning, precautionary regulation, existential risk framing.

Narrative type: Ally (opportunity; anthropomorphic agent)

Framing: AI is imagined as a sentient co-worker, capable of learning, adapting, even empathizing.

Typical usage: AI as therapists, co-pilots in software, clinical assistants in medicine, humanized chatbots.

Example: A hospital deploys an empathetic AI assistant that helps patients navigate treatments, communicating with warmth and memory of prior interactions.

Organizational mindset: Foster human–AI collaboration, experiment with hybrid workflows, build trust.

Narrative type: Weapon (threat; tool)

Framing: AI is a tool, but its power can be co-opted for surveillance, manipulation, or warfare.

Typical usage: Deepfakes, algorithmic bias in hiring or policing, military drones.

Example: A social platform faces backlash when its recommendation algorithm radicalizes users—raising concerns about profit-driven AI misuse.

Organizational mindset: Legal compliance, ethics boards, defensive governance.

Narrative type: Augmenter (opportunity; tool)

Framing: AI is seen as a neutral, controllable tool that enhances efficiency, precision, and scale.

Typical usage: Process automation, predictive analytics, enterprise decision support.

Example: A retail chain uses AI to optimize inventory, reducing waste and improving margins. AI is a “smarter spreadsheet”—powerful but subordinate.

Organizational mindset: Upskill the workforce, adopt AI-as-a-service tools, focus on ROI.

This typology offers more than classification. Each narrative acts as a strategic lens, influencing how institutions allocate resources, craft policy, and communicate internally and externally.

The four AI narrative types (Augmenter, Ally, Weapon, and Monster) each carry distinct cognitive models that shape how organizations behave across critical strategic dimensions. These narratives not only inform how AI is framed in public discourse but also embed themselves in policies, learning systems, ethical reasoning, and institutional structures. Below, we outline how each narrative type influences these dimensions in practice.

In policy terms, the Augmenter narrative supports a pro-innovation stance with minimal regulatory interference. AI is viewed as a productivity enhancer, another wave of automation, which leads to policies that favor experimentation and adoption over precaution. By contrast, the Ally narrative encourages co-development and responsible innovation through collaborative frameworks that integrate diverse stakeholder voices, including employees, users, and communities.

The Weapon narrative triggers defensive regulatory moves, focusing on mitigating misuse, surveillance risks, or adversarial deployment of AI. Regulation here is less about enabling innovation and more about control. Meanwhile, the Monster narrative invokes existential threats and systemic collapse scenarios (e.g., runaway superintelligence), pushing institutions toward drastic containment policies, moratoriums, or even anti-AI postures.

Narratives strongly influence the level and quality of public trust. The Augmenter frame fosters utilitarian trust: people use AI for convenience and efficiency but remain emotionally disengaged. Ally narratives, however, humanize AI—invoking companionship, empathy, and care—which can create deep, even over-extended trust relationships.

In contrast, Weapon narratives reduce trust by amplifying fears of surveillance, manipulation, or job loss. The Monster narrative breeds polarized perceptions: while some see AI as a savior, others view it as an existential threat—leading to volatility in public sentiment and inconsistent policy pressures.

Each narrative scaffolds different learning imperatives. The Augmenter model promotes task-specific training and upskilling—focused on tools, platforms, and productivity. The Ally frame emphasizes relational learning, where users experiment with AI and adaptively co-learn, sometimes forming emotional bonds with the technology.

The Weapon narrative encourages defensive learning, such as ethics-by-design frameworks, regulatory literacy, and adversarial thinking. The Monster frame, however, may suppress learning altogether—producing avoidance, resignation, or a sense that nothing can be done without fundamental breakthroughs in AI safety.

In the Augmenter view, ethics are localized—focused on user responsibility and proper implementation. The Ally narrative expands this to a mutual accountability model: AI and humans co-evolve and share moral agency. Weapon narratives externalize ethics, requiring formal regulation, institutional oversight, and collective safeguards.

The Monster narrative invokes a planetary scale of ethics, where the stakes are existential and discussions center on AI alignment, value lock-in, or the moral legitimacy of creating potentially uncontrollable agents.

Governance approaches mirror these frames. Augmenter institutions rely on tech-driven governance—led by innovation units and product teams. Ally narratives push toward participatory governance, where ethics boards, interdisciplinary teams, and public engagement become central. Weapon narratives lead to centralized regulatory bodies, audit mechanisms, and surveillance safeguards. Monster narratives often invoke crisis-mode governance: reactive, ad hoc, and prone to drastic interventions that lag behind technological developments.

Institutionally, the Augmenter story encourages iterative, incremental adaptation, usually through pilot projects. The Ally frame supports experimental, flexible cultures with built-in feedback loops. Weapon narratives result in protocol-heavy, compliance-first institutions that resist rapid change. Monster narratives frequently induce organizational paralysis or sporadic over-corrections based on perceived catastrophic risk.

Narratives also shape individual mindsets. In the Augmenter context, employees see AI as a skill challenge—something to learn and master. Under the Ally model, users feel like collaborators, often imbuing AI with human-like traits and emotional significance. The Weapon frame breeds caution and compliance, encouraging users to stay within strict boundaries. The Monster narrative can result in disengagement, cynicism, or even resistance to using AI tools altogether.

Finally, different narratives guide strategic readiness. Augmenter narratives prioritize workforce reskilling and digital transformation. Ally frames lead to cross-functional capacity building and interdisciplinary fluency. Weapon narratives emphasize legal preparedness, compliance frameworks, and ethical review mechanisms. The Monster narrative demands scenario planning, red-teaming, and crisis simulations—reflecting a world where the next move could be existential.

This discussion highlights a critical yet often overlooked element in the AI discourse: the narratives that mediate understanding, shape strategy, and guide action in contexts of uncertainty. These narrative types are not just rhetorical devices—they act as cognitive scaffolds through which non-experts interpret complex technologies, form judgments, and make decisions.

For organizations, this has three major implications:

Most organizational stakeholders—executives, managers, employees, and regulators—do not engage with AI at the code or algorithmic level. They encounter it through metaphors, headlines, policies, product pitches, and social anxieties. Narratives thus become the de facto epistemology of AI in organizations. Without critical awareness of these frames, leaders risk being led by them—drifting into either naive techno-optimism (“AI will fix everything”) or paralyzing fear (“AI will destroy us”).

By cultivating narrative literacy—understanding the typologies, recognizing their triggers, and unpacking their assumptions—organizations can reclaim agency in how AI is interpreted and deployed.

Organizations must go beyond technical upskilling to include narrative education as part of AI readiness. This means training teams to:

Such reflexivity creates a more grounded and deliberate AI culture—one that can accommodate uncertainty without defaulting to hype or hysteria.

Narratives do not merely describe the world—they help shape it. Strategic alignment between an organization’s narrative framing and its operational approach to AI is crucial. For example:

In shaping AI’s future, how we talk about it may be just as important as how we build it. Narratives are not mere sidebars to technical progress—they are organizing forces that channel attention, shape norms, and govern action. As AI capabilities evolve, organizations must become intentional stewards of the stories they inhabit and project.

By engaging critically with AI narratives—identifying them, educating around them, and strategically aligning with or against them—leaders can better navigate the socio-technical terrain ahead. The future of AI will not be written by engineers alone; it will be co-authored by the narratives we choose to believe, amplify, and enact.