California Management Review

California Management Review is a premier academic management journal published at UC Berkeley

by Luca Collina, Mostafa Sayyadi, and Michael Provitera

The article explores how to assign accountability when artificial intelligence systems are involved in decision-making. As A.I. becomes more widespread, it is unclear who should be held responsible when these systems make poor choices. The traditional top-down accountability model, running from executives to managers, is challenged by A.I.’s black-box nature. Alternative approaches, such as holding developers or users liable, have limitations as well. The authors argue that shared accountability across multiple stakeholders may be optimal when supported by testing, oversight committees, guidelines, regulations, and explainable A.I. Concrete examples from finance, customer service, and surveillance illustrate A.I. accountability issues. The paper summarizes perspectives from academia and business practice on the roles of executives and boards, including mandating audits and transparency. It concludes that while A.I. accountability models remain debated, decision-makers must take responsibility for the technologies they deploy. The article suggests that combining prescriptive accountability rules with data quality evaluation frameworks can optimize resources, enhance A.I.-assisted decision-making, align with regulatory requirements, respect stakeholders, and help organizations gain competitive advantage from advanced technology.

Strategy implementation requires clear accountability to succeed. A single decision-maker is ultimately accountable and liable for a strategy’s outcomes, as for any strategic activity conducted. Traditional organizational structures assign responsibility from the top down: executives and managers hold themselves responsible for setting strategies and producing results, with accountability flowing downward from there.1 Unfortunately, with the rise of A.I. technologies, assigning accountability has become more complicated.2 Artificial intelligence systems can make decisions without direct human oversight, so who bears the responsibility when an A.I. system makes poor choices that lead to negative consequences? This article addresses that critical question.

Much emphasis has rightly been put on machine learning (ML) and artificial intelligence (A.I.) biases. Discussion has generally focused on human bias, but what about bias in the machine learning training data itself? Equally, it is time to stop overlooking accountability for harmful A.I. actions, an issue still under discussion and only partially addressed as countries and economic areas work on regulations.3

Finance and national security: Consider A.I. systems that monitor for deceptive or illegal activity and for security threats. If such a system treats legitimate activity as wrongdoing, or misses actual wrongdoing or a real threat, who is to be held accountable?

Health: What if diagnoses are wrong because of issues with ML and A.I.?

Transportation: Consider autonomous vehicles whose algorithms fail. Who is accountable for the damage A.I. causes to stakeholders?

Banking and A.I.: Banks use A.I. chatbots for customer service. A.I. also analyzes transaction patterns to flag unusual spending that could indicate fraud, often detecting fraud more effectively than people do. If something goes wrong because of biases, errors, or lack of transparency, the use of A.I.:

diffuses accountability across black-box systems instead of people;

enables algorithmic harms to go unnoticed and unchallenged; and

reduces the ability to remedy issues or ensure ethical oversight, as opaque algorithms replace human judgment and discretion in banking decisions.

Customers could suffer blocked payments, locked accounts, or credit damage from improper flags. Biases could also lead to unfair denials of applications. The lack of transparency in banks’ A.I. systems means customers have no way to understand or contest detrimental algorithmic decisions affecting their finances.

Customer service: Customer service chatbots can respond to common questions with automated answers. More advanced chatbots can find and present information from company websites when asked. Chatbots use natural language processing (NLP) and can continue to learn from conversations. If something goes wrong…?

No single employee can be blamed if the automated system fails, and customers have no recourse for issues mishandled by a faulty chatbot.

Inaccurate information may be pulled from websites without oversight, and no one is accountable for the errors.

Biases in algorithms can lead chatbots to exclude certain groups or make inappropriate comments. Opaque algorithms make it hard to pinpoint where accountability lies.

Chatbots may handle private data unreliably, putting customer privacy at risk.

Customers in these scenarios may be unable to get their inquiries resolved, waste their time, feel discriminated against, and be exposed to privacy risks.

Marketing and A.I.: A.I. and machine learning enable marketers to process large datasets rapidly and extract insights from them, improving data-driven decisions. Marketing now uses A.I. for consumer recommendations and to automate repetitive tasks, saving marketers time and effort.

When marketing A.I. fails, accountability can become diffused across its black-box algorithm, leaving poor decisions, biases, and harms unchallenged by customers and marketers alike. Automating repetitive marketing tasks reduces opportunities for quality checks and creates accountability gaps, leaving customers with less transparency and agency. Customers have few legal recourses when unethical, illegal, or harmful A.I. marketing tactics cause financial damage through misguided campaigns.

Surveillance and security: Surveillance and security cameras now use A.I. to detect potential threats. By analyzing video feeds, these systems learn to recognize problems such as intruders, unauthorized access, or odd behaviour. Although A.I. surveillance still has limits, its performance continues to improve rapidly.

If something goes wrong…? Inaccurate A.I. threat detection and image recognition algorithms could lead to discriminatory surveillance and over-policing of marginalized groups. At the same time, over-reliance on automated surveillance provides a false sense of security.

Taken together, these failures create an accountability problem: recourse is limited and human monitoring is ineffective. Diluting accountability offers temporary relief while obscuring the responsibility that lies with inadequate human monitoring.

The impact on people is heavy in these situations: ineffective A.I. surveillance imposes significant strains through privacy invasions, unfair targeting, unchecked abuses of power, and erosion of civil liberties, with limited means of redress.

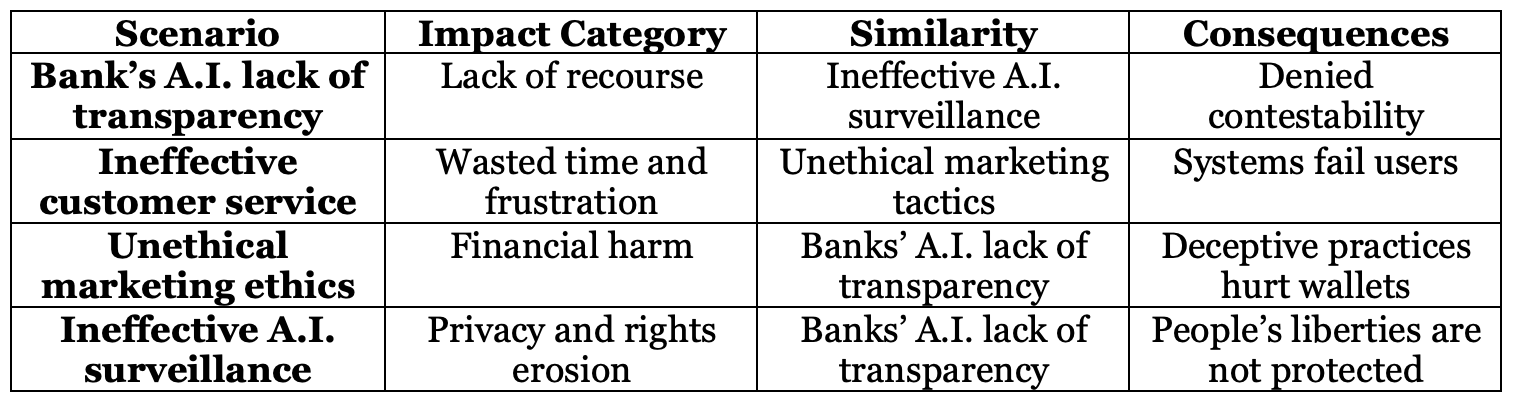

Table 1 - A synopsis of cross-referenced impacts and scenarios

There are several ways to assign accountability when A.I. is involved. One holds the system’s creators liable;4 however, this may be impractical when multiple parties contribute over time. Furthermore, even developers who strive to build impartial tools may find it impossible to predict how those tools will behave in varied real-life settings.

Another approach is to hold users accountable. For example, if a bank manager relies on an A.I. lending tool that unfairly discriminates against certain loan applicants, the manager could be liable for not properly overseeing its decisions.5 This, however, assumes the manager fully understands how the tool produces its outputs.

Because of these challenges, some experts argue that accountability for A.I. systems should be shared among various stakeholders.6 Developers, users, and the business leaders who deploy A.I. systems could all play a part in upholding it. Shared accountability better reflects how A.I. risks and rewards are distributed, but it can dilute responsibility if the boundaries of each party’s accountability remain unclear.

Ensuring that A.I. systems operate responsibly is critical, so let’s look at how organizations can achieve it. First, A.I. systems must be tested to understand their decision-making processes, and detailed records of their activities must be kept. Like a teacher keeping students’ homework, keeping and reviewing records can provide much-needed evidence if something goes awry or seems unclear. For this process to work, those in charge must commit time, energy, and money to it.
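To make the record-keeping idea concrete, here is a minimal sketch in Python. The `DecisionRecord` fields and the `DecisionLog` class are hypothetical illustrations, not a standard schema or any product’s API. Each A.I.-assisted decision is stored with its inputs, output, model version, and the person (if any) who reviewed it, so that evidence can be retrieved later and unreviewed decisions can be listed.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass(frozen=True)
class DecisionRecord:
    """One A.I.-assisted decision, captured for later review (hypothetical schema)."""
    decision_id: str
    model_version: str
    inputs: dict
    output: str
    reviewer: Optional[str] = None  # person who signed off, if anyone did
    timestamp: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


class DecisionLog:
    """Append-only, in-memory log; a real system would need durable, tamper-evident storage."""

    def __init__(self) -> None:
        self._records: list[DecisionRecord] = []

    def record(self, rec: DecisionRecord) -> None:
        self._records.append(rec)

    def find(self, decision_id: str) -> Optional[DecisionRecord]:
        """Retrieve the evidence for one decision, e.g. after a complaint."""
        return next((r for r in self._records if r.decision_id == decision_id), None)

    def unreviewed(self) -> list[DecisionRecord]:
        """Decisions that no accountable person has reviewed yet."""
        return [r for r in self._records if r.reviewer is None]

    def export_json(self) -> str:
        return json.dumps([asdict(r) for r in self._records], indent=2)


# Hypothetical usage: two lending decisions, only one reviewed by a person.
log = DecisionLog()
log.record(DecisionRecord("loan-001", "credit-model-v3", {"income": 52000}, "approve",
                          reviewer="j.smith"))
log.record(DecisionRecord("loan-002", "credit-model-v3", {"income": 18000}, "deny"))
print([r.decision_id for r in log.unreviewed()])  # ['loan-002']
```

The `unreviewed()` query is the part that supports accountability: it shows which decisions no named person has yet taken responsibility for.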

Next, we can envision groups or committees scrutinizing how A.I. systems are used, taking particular care with applications that significantly affect people’s lives. Such bodies could set rules, monitor whether the A.I. performs as expected, and intervene if there is an issue, much as school boards make rules and ensure compliance.

Before an A.I. system is used, there should be an established process to make sure it satisfies certain standards, much like passing a safety inspection before a car is driven on public streets. Such guidelines ensure that everyone involved knows precisely what is permitted or prohibited regarding A.I. use and security, where things stand with respect to regulations, and what must still be addressed.

There is also the law. Just as regulations govern businesses and cars, A.I. is subject to rules as well. Banks and hospitals, for example, can face severe consequences for mistakes made by their A.I. systems,7 which gives businesses an incentive to build safe A.I.

Companies themselves can also take steps to ensure A.I. is used ethically. One idea is to appoint a Chief A.I. Ethics Officer to oversee that use and to ensure that the company follows the rules and does the right thing.

We must understand how A.I. makes decisions. If something goes wrong, we must know why. Knowing the reason can help solve problems or prevent them in the future.

In addition to the regulations under discussion or awaiting approval, several practitioner and industry-association initiatives offer guidance on A.I. assurance through guidelines and case studies. The list provided by the Centre for Data Ethics and Innovation is not exhaustive, but it is a meaningful resource.

Explainable A.I. (XAI) is a concept and methodology for bringing clarity to the black box of ML. It helps executives and managers understand why a system makes specific suggestions: rather than just giving advice, it also explains its reasoning. This transparency builds awareness and trust, since people can see how the A.I. arrived at its recommendations.
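As a simple illustration of what an explanation can look like, the sketch below breaks a linear model’s score into per-input contributions (weight times value) and ranks them. The fraud-risk feature names, weights, and bias are invented for illustration. This additive breakdown is only one basic technique and is exact only for linear models; more complex models need dedicated XAI methods.

```python
def explain_linear_score(weights: dict[str, float], bias: float,
                         features: dict[str, float]) -> tuple[float, list[tuple[str, float]]]:
    """Return a linear model's score and each feature's contribution, largest magnitude first."""
    contributions = {name: weights[name] * features[name] for name in weights}
    score = bias + sum(contributions.values())
    ranked = sorted(contributions.items(), key=lambda kv: abs(kv[1]), reverse=True)
    return score, ranked


# Hypothetical fraud-risk model: weights and inputs are illustrative only.
weights = {"amount_vs_usual": 0.8, "new_merchant": 0.5, "foreign_country": 0.3}
features = {"amount_vs_usual": 3.0, "new_merchant": 1.0, "foreign_country": 0.0}

score, reasons = explain_linear_score(weights, bias=-1.0, features=features)
print(f"risk score = {score:.2f}")
for name, value in reasons:
    print(f"  {name}: {value:+.2f}")  # why the transaction was scored this way
```

A manager reading this output sees not just a risk score but which inputs drove it, which is the kind of reasoning XAI aims to surface.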

Business practitioners have discussed the Board’s responsibility for ensuring accountability for the ethical and safety issues that algorithms may raise. Gregory (2023) reminds us that the Board of Directors oversees company operations and direction and is accountable for supervising management’s use of delegated authority.8 The Board cannot simply hand over power, and this point carries particular weight for A.I.

Silverman (2020) makes a related contribution.9 She proposes that Boards of Directors take an active approach to overseeing artificial intelligence as its use grows. With so much A.I. deployed, Boards should ensure it aligns with the company’s mission and risks and should devise a detailed plan for overseeing A.I. deployment and implementation.

Recent years have witnessed artificial intelligence systems become more capable and widespread in their applications, yet concerns have also surfaced in academia regarding accountability and ethics when using A.I. systems. Boards are fundamental in ensuring companies develop and deploy A.I. responsibly.

Boards should mandate testing procedures to minimize harm and identify potential biases or failures before deploying systems.10 Regular algorithmic auditing processes should also be implemented.

In addition, boards must demand transparency and traceability for A.I. systems through documentation of each system’s development, training data, capabilities, and limitations, so that accountability can be established. High-risk systems should also have human-in-the-loop oversight. Boards should regularly assess A.I. systems for discriminatory effects or rights violations.
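Human-in-the-loop oversight can be pictured as a routing rule: an A.I. recommendation is applied automatically only when the use case is not designated high-risk and the model is sufficiently confident; otherwise a person decides. The sketch below assumes a hypothetical `HIGH_RISK_USES` set and a 0.9 confidence threshold chosen purely for illustration; in practice the board or its delegates would set these criteria.

```python
from dataclasses import dataclass

# Hypothetical policy values, set through governance rather than by developers.
HIGH_RISK_USES = {"credit_decision", "medical_triage", "account_freeze"}
CONFIDENCE_THRESHOLD = 0.9


@dataclass
class ModelOutput:
    use_case: str
    recommendation: str
    confidence: float  # model's reported confidence in [0, 1]


def route(output: ModelOutput) -> str:
    """Decide whether an A.I. recommendation may be applied without a human decision."""
    if output.use_case in HIGH_RISK_USES:
        return "human_review"  # high-risk uses always go to an accountable person
    if output.confidence < CONFIDENCE_THRESHOLD:
        return "human_review"  # uncertain outputs are escalated
    return "auto_apply"


print(route(ModelOutput("account_freeze", "freeze", 0.99)))   # human_review
print(route(ModelOutput("faq_answer", "send_answer", 0.95)))  # auto_apply
print(route(ModelOutput("faq_answer", "send_answer", 0.60)))  # human_review
```

Checking the high-risk designation first means that even a very confident model cannot bypass human review where the stakes are highest.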

Last but not least, boards must stay informed about A.I. governance developments. Keeping abreast of new regulations, industry standards, and best practices helps boards stay ahead of the game. Adopting frameworks such as IEEE Ethically Aligned Design is one way boards can demonstrate their commitment to responsible A.I.11

Some disagreement remains over whether executives can reasonably be held liable for A.I. systems they do not fully comprehend. Some argue that making end users accountable is more consistent with human accountability, since executives may lack the technical know-how to audit A.I.-assisted decisions. Others suggest that executives must assume full responsibility for any technologies they implement, even if the details remain vague.5

Shared accountability models could offer one way out by spreading responsibility across developers, users, and business leaders; however, these models could backfire by diluting individual responsibility if divisions remain unclear. Finding an equitable balance requires new corporate policies and governance structures, as well as attention to regulations in various countries that may determine how responsibility is assigned.

Implementing A.I. strategies still relies on one accountable decision-maker. External oversight boards could help monitor A.I. risks and arbitrate accountability questions, although their creation often meets with industry resistance. Whoever is in charge should use all available tools and take responsibility for the A.I. systems deployed, even when specific details remain unclear. Various strategies are available to make A.I. systems work effectively and responsibly while promoting transparency.

A.I. accountability will require new ways of tracking decisions across human and machine components. Executives cannot abdicate responsibility when using artificial intelligence systems, despite the technology’s inherent uncertainties. The fact that academia and practice share common views and pillars could simplify the practical, top-down management of A.I. accountability.

To make A.I. accountability more concrete, we propose two frameworks that can easily be combined. The first is a simple, procedural four-step framework that organizations can follow when deploying A.I. systems:

A. Impact Assessment: Before implementation, assess the potential impacts, both beneficial and harmful, that the A.I. system could have on individuals and groups. This allows areas of concern to be identified preemptively.12

B. Risk Monitoring: Once the system is in use, monitor metrics that track risks around fairness, security, safety, transparency, and other dimensions. Regular algorithmic auditing can detect emerging issues.10

C. Incident Response: If any incidents occur where the A.I. system causes harm, have a plan in place to investigate, document, and remediate the situation. Loop in legal/compliance teams and external oversight bodies as needed.

D. Accountability Mapping: Create a map clarifying the responsibilities of different stakeholders - developers, business leaders, and users - related to the A.I. system’s outcomes. Update policies and processes to match the map.
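An accountability map can start as a simple table from each lifecycle activity to the party accountable for it. The sketch below uses hypothetical activities and roles and checks for the failure mode the article warns about: an activity with no accountable owner, or with accountability split so that boundaries blur. Requiring exactly one accountable party per activity is an assumption borrowed from RACI-style charts; others can still be consulted or informed.

```python
# Hypothetical accountability map: activity -> parties accountable for it.
ACCOUNTABILITY_MAP: dict[str, list[str]] = {
    "training_data_quality": ["developer"],
    "pre_deployment_impact_assessment": ["business_leader"],
    "day_to_day_use": ["user"],
    "incident_response": ["business_leader", "developer"],
    "regulatory_reporting": [],
}


def unclear_boundaries(amap: dict[str, list[str]]) -> dict[str, str]:
    """Flag activities that do not have exactly one accountable party."""
    problems = {}
    for activity, owners in amap.items():
        distinct = sorted(set(owners))
        if not distinct:
            problems[activity] = "no accountable party"
        elif len(distinct) > 1:
            problems[activity] = "shared by " + ", ".join(distinct)
    return problems


for activity, issue in unclear_boundaries(ACCOUNTABILITY_MAP).items():
    print(f"{activity}: {issue}")
```

Each flagged activity is a place where policies and processes need updating before the map can support a clear assignment of liability.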

This framework provides organizations with a starting point to operationalize A.I. accountability. It helps align incentives around responsible A.I. development, deployment, and monitoring. Developers gain a process for building safer systems, while business leaders have steps to oversee A.I. risks proactively. Mapping accountability enables appropriate assignment of liability if issues emerge. Overall, this structured approach can lead to A.I. systems that benefit society responsibly.
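Step B’s risk monitoring can be sketched as a periodic check that compares reported risk metrics with agreed limits and produces alerts for the accountable owners. The metric names and limits below are hypothetical; which metrics matter and what counts as a breach are governance decisions, not technical defaults.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class MetricLimit:
    name: str
    max_value: float  # a breach occurs if the observed value exceeds this


# Hypothetical limits for one deployed system.
LIMITS = [
    MetricLimit("selection_rate_gap", 0.10),  # fairness: gap between groups
    MetricLimit("error_rate", 0.05),          # safety and quality
    MetricLimit("unexplained_share", 0.20),   # transparency: outputs lacking an explanation
]


def check_metrics(observed: dict[str, float], limits: list[MetricLimit]) -> list[str]:
    """Return a readable alert for every breached or unreported metric."""
    alerts = []
    for limit in limits:
        value = observed.get(limit.name)
        if value is None:
            alerts.append(f"{limit.name}: not reported")  # missing data is itself a risk
        elif value > limit.max_value:
            alerts.append(f"{limit.name}: {value:.2f} exceeds limit {limit.max_value:.2f}")
    return alerts


weekly = {"selection_rate_gap": 0.14, "error_rate": 0.03}
for alert in check_metrics(weekly, LIMITS):
    print(alert)
```

Treating an unreported metric as an alert reflects the point that gaps in monitoring are themselves an accountability risk.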

We also suggest an alternative framework that makes accountability more tangible through oversight and transparency:

Establish Ethics Boards: Appoint cross-functional ethics boards to review A.I. systems before and after deployment for risks related to fairness, bias, safety, and other related concerns. They can set standards and intervene if issues arise.13

Implement Algorithmic Audits: Undertake regular third-party audits of datasets, algorithms, and outputs to detect inaccuracies, biases, and harms. Auditors should have expertise in statistics, ethics, and law.14

Enforce Explainability: A.I. systems must be transparent about their purpose, data sources, capabilities, and limitations so that their behavior can be explained. Explainable A.I. methods can illuminate how outputs are produced.

Engage Impacted Groups: Involve representatives of the people affected by an A.I. system in its design, development, auditing, and oversight. Their real-world perspectives can provide invaluable input.
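An algorithmic audit of outputs might, for example, compare error rates across groups. The sketch below computes each group’s false positive rate (legitimate cases wrongly flagged), echoing the banking example of customers whose accounts are blocked by improper fraud flags, and then the ratio between the highest and lowest rates. The audit records and the 1.25 tolerance are hypothetical; a real audit would use much larger samples and appropriate statistical tests.

```python
from collections import defaultdict


def false_positive_rates(records: list[tuple[str, bool, bool]]) -> dict[str, float]:
    """records: (group, flagged_by_model, actually_fraud). Returns the FPR per group."""
    flagged_legit: dict[str, int] = defaultdict(int)
    total_legit: dict[str, int] = defaultdict(int)
    for group, flagged, fraud in records:
        if not fraud:  # only legitimate cases can be false positives
            total_legit[group] += 1
            if flagged:
                flagged_legit[group] += 1
    return {g: flagged_legit[g] / total_legit[g] for g in total_legit}


def fpr_disparity(rates: dict[str, float]) -> float:
    """Ratio of highest to lowest group FPR (inf if one group is never wrongly flagged but another is)."""
    lo, hi = min(rates.values()), max(rates.values())
    if lo == 0:
        return float("inf") if hi > 0 else 1.0
    return hi / lo


# Hypothetical audit sample: (group, flagged, actually_fraud)
sample = [
    ("A", True, False), ("A", False, False), ("A", False, False), ("A", False, False),
    ("A", True, True),
    ("B", True, False), ("B", True, False), ("B", False, False), ("B", False, False),
    ("B", True, True),
]
rates = false_positive_rates(sample)
print(rates)  # {'A': 0.25, 'B': 0.5}
ratio = fpr_disparity(rates)
print(f"disparity ratio = {ratio:.2f}")
if ratio > 1.25:  # hypothetical tolerance set by the ethics board
    print("Escalate to ethics board for review")
```

Here legitimate customers in group B are wrongly flagged twice as often as those in group A, the kind of finding an auditor would report to the ethics board rather than resolve alone.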

This oversight and transparency framework strikes a balance between innovation and responsibility. Ethics boards and auditors incentivize accountability at every stage of the A.I. lifecycle. Explainability and community participation foster trust within society - creating ethical A.I. that respects diverse stakeholder needs.

The two frameworks approach A.I. accountability from different vantage points. The first is procedural and supports internal implementation through pre-deployment impact assessments, ongoing risk monitoring, incident response plans, and accountability mapping. The second focuses on external accountability through ethics boards, mandated audits, explainability requirements, and community participation in oversight. Adopting them can demonstrate thought leadership in operationalizing accountable A.I.15

Together, these frameworks give business leaders approaches to A.I. adoption that can be tailored to their priorities. Each offers distinct strengths that businesses can harness to adopt this emerging technology responsibly, while remaining flexible enough for customized implementation and for incorporation into the policies and regulations that will emerge over time.

Artificial intelligence accountability models remain contentious, but executives must assume responsibility for the technologies they deploy. Shared accountability among developers, users, and companies shows promise if governance policies align incentives appropriately. Testing, auditing, explainability, and oversight can bolster accountability despite A.I.’s uncertainties. Organizations should balance innovation with responsibility, adopting capabilities in ways that respect stakeholders, and collective responsibility across society is necessary to realize A.I.’s potential. An approach that supports transparency and oversight is therefore needed when adopting A.I. capabilities within organizations. There is also an opportunity to combine prescriptive management rules with data quality management to support strategic decision-making. This approach would reduce workloads and optimize investments while protecting stakeholders’ interests and realizing A.I.’s strategic value with assured data quality.

1. Mittelstadt, B., Allo, P., Taddeo, M., Wachter, S. and Floridi, L., 2016. The ethics of algorithms: Mapping the debate. Big Data & Society, 3(2), p.2053951716679679.

2. Tóth, Z., Caruana, R., Gruber, T. and Loebbecke, C., 2022. The dawn of the A.I. robots: Towards a new framework of A.I. robot accountability. Journal of Business Ethics, 178, pp.895-916.

3. Bansal, S., 2020. 15 Real World Applications of Artificial Intelligence. [Online] Available at: https://www.analytixlabs.co.in/blog/applications-of-artificial-intelligence/ [Accessed 31 August 2023].

4. Novelli, C., Taddeo, M. and Floridi, L., 2023. Accountability in artificial intelligence: what it is and how it works. A.I. & Society. https://doi.org/10.1007/s00146-023-01635-y

5. Burton, J.W., Stein, M. and Jensen, T.B., 2020. A systematic review of algorithm aversion in augmented decision making. Journal of Behavioral Decision Making, 33(2), pp.220-239.

6. Bryson, J. and Winfield, A., 2017. Standardizing ethical design for artificial intelligence and autonomous systems. Computer, 50(5), pp.116-119.

7. Abebe, R., Barocas, S., Kleinberg, J., Levy, K., Raghavan, M. and Robinson, D.G., 2022. Roles for computing in social change. In Proceedings of the 2022 ACM Conference on Fairness, Accountability, and Transparency (pp.662-673).

8. Gregory, H., 2023. A.I. and the Role of the Board of Directors. [Online] Available at: https://www.reuters.com/practical-law-the-journal/transactional/ai-role-board-directors-2023-08-01/ [Accessed 13 September 2023].

9. Silverman, K., 2020. Why your Board needs a plan for A.I. oversight. MIT Sloan Management Review, 62(1), pp.1-6.

10. Raji, I.D., Smart, A., White, R.N., Mitchell, M., Gebru, T., Hutchinson, B., Smith-Loud, J., Theron, D. and Barnes, P., 2020. Closing the A.I. accountability gap: Defining an end-to-end framework for internal algorithmic auditing. In FAT* 2020: Proceedings of the 2020 Conference on Fairness, Accountability, and Transparency (pp.33-44).

11. IEEE Global Initiative on Ethics of Autonomous and Intelligent Systems, 2019. Ethically Aligned Design: A Vision for Prioritizing Human Well-being with Autonomous and Intelligent Systems, First Edition. IEEE.

12. Varshney, K.R., 2022. Trustworthy machine learning and artificial intelligence. XRDS: Crossroads, The ACM Magazine for Students, 29(2), pp.26-29.

13. Jobin, A., Ienca, M. and Vayena, E., 2019. The global landscape of A.I. ethics guidelines. Nature Machine Intelligence, 1(9), pp.389-399.

14. Sandvig, C., Hamilton, K., Karahalios, K. and Langbort, C., 2014. Auditing algorithms: Research methods for detecting discrimination on internet platforms. Data and Discrimination: Converting Critical Concerns into Productive Inquiry, pp.1-23.

15. Zhang, B., Dafoe, A., Evans, O. and Olhede, S., 2021. Leveraging technical advances to improve A.I. safety. In Proceedings of the AAAI/ACM Conference on A.I., Ethics, and Society (pp.330-337).