California Management Review

California Management Review is a premier professional management journal for practitioners published at UC Berkeley Haas School of Business.

Pitabas Mohanty, Supriti Mishra, and Tina Stephen

Image Credit | Odin AI

“…it is important that the board recognizes that AI does not only affect the business but also the board itself, i.e., the governance with AI”. – Michael Hilb[1]

Artificial intelligence (AI) is reshaping competitive landscapes across industries, altering how companies create value and maintain strategic advantage. Although many organizations use AI to automate workflows and develop personalized customer experiences, the role of boards in governing these AI-driven initiatives remains underdeveloped. According to a Deloitte survey, only 14% of boards discuss AI regularly, and 45% of firms have yet to put AI on the board’s agenda at all[2]. A Harvard Law School study finds that only 13% of S&P 500 companies have directors with AI expertise[3]. This disconnect reveals a critical governance gap: as AI shifts from a novel technology to a foundational element of corporate strategy, directors risk falling behind.


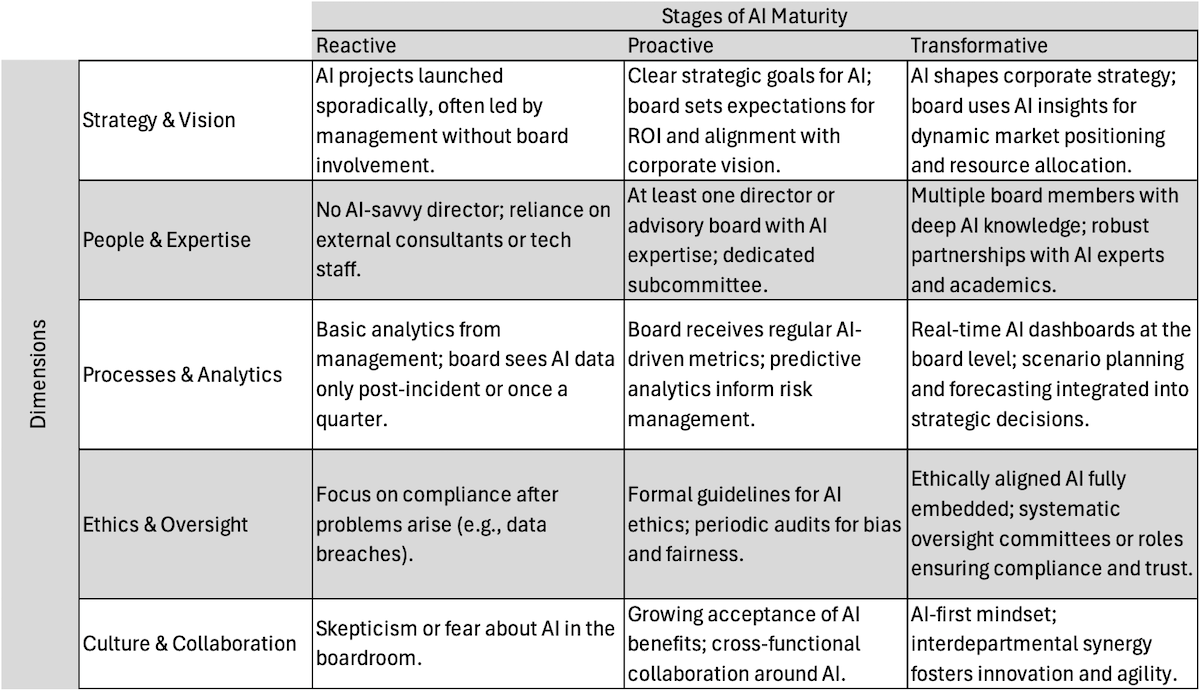

We propose an AI Governance Maturity Matrix to provide boards with a roadmap for progressively developing AI oversight capabilities. The matrix spans five key dimensions—Strategy & Vision, People & Expertise, Processes & Analytics, Ethics & Oversight, and Culture & Collaboration—each organized into three maturity stages: Reactive, Proactive, and Transformative. We believe that, by applying this matrix, boards can identify their current level of readiness, determine clear improvement targets, and systematically strengthen AI governance. In so doing, they will be able to close the gap between rapid technological innovations and the deliberate, responsible oversight needed to deploy AI for sustainable business advantage.

Each dimension evolves through the same three stages. Most boards begin at the reactive stage, dealing with AI on an ad hoc basis. As they progress, they adopt proactive measures, such as establishing committees or introducing specific AI reporting protocols. Ultimately, boards at the transformative stage fully integrate AI into strategic governance, ensuring that AI is not only operationally sound but also a driver of innovation and long-term value creation.

Table 1: AI Governance Maturity Matrix

Table 1 outlines how boards can advance from minimal awareness to thorough, systematic engagement with AI across all dimensions. We explain each dimension briefly here.

In the Strategy & Vision dimension, boards at the reactive stage learn about AI projects only when those projects produce either spectacular success or notable failure. In the proactive stage, by contrast, boards begin setting targeted expectations for AI: they require management to explain how AI initiatives align with overall business strategy, to establish Key Performance Indicators (KPIs), and to define ROI expectations. For example, the board of a retail firm using AI-based inventory management would demand an explicit link to strategic goals, such as reducing stock shortages or improving supply chain resilience. At the transformative stage, AI becomes inseparable from the company’s strategic vision: the board regularly consults AI-driven forecasts, market analyses, and scenario planning to guide resource allocation and to pivot swiftly as markets change.

The People & Expertise dimension addresses one of the most common obstacles to robust AI governance: a shortage of AI knowledge among board members. Without expertise on the board, oversight tends to be cursory, and directors defer to management on complex AI decisions. In the reactive stage, boards engage external consultants when crises arise, or they rely on internal reports that may lack critical depth. In the proactive stage, boards take deliberate steps to build their collective competence, such as recruiting members with AI backgrounds or establishing a technology subcommittee. Transformative boards go beyond recruitment: they sponsor ongoing AI education for all directors, form standing committees dedicated to technology governance, and build relationships with universities or think tanks, so that directors remain conversant with emerging AI trends, regulatory shifts, and ethical frameworks.

Even with strategic clarity and strong expertise, boards need processes and analytics that bring AI insights into governance, which is the focus of the Processes & Analytics dimension. At the reactive stage, boards may receive updates only sporadically, often after issues surface, and AI projects may run in organizational silos with minimal feedback loops to the board. Proactive boards, by contrast, implement structures for ongoing reporting. They mandate periodic AI performance reviews and real-time dashboards for risk detection (e.g., cybersecurity threats or credit defaults in a financial institution), and they use predictive analytics to anticipate market shifts. These steps reduce the blind spots that hamper timely decision-making.
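To make threshold-based risk dashboards more concrete, here is a minimal Python sketch, assuming the board has agreed in advance on a limit for each indicator it monitors. The metric names, values, and limits are hypothetical illustrations, and `RiskMetric` and `breaches` are names introduced only for this sketch, not features of any particular dashboard product.

```python
from dataclasses import dataclass


@dataclass
class RiskMetric:
    """One indicator on a hypothetical board-level AI risk dashboard."""
    name: str
    value: float
    threshold: float              # limit agreed by the board (hypothetical)
    higher_is_worse: bool = True  # set False for metrics such as model accuracy


def breaches(metrics: list[RiskMetric]) -> list[str]:
    """Return the names of metrics that have crossed their threshold."""
    flagged = []
    for m in metrics:
        if m.higher_is_worse:
            crossed = m.value > m.threshold
        else:
            crossed = m.value < m.threshold
        if crossed:
            flagged.append(m.name)
    return flagged


if __name__ == "__main__":
    # Illustrative numbers only, not drawn from any real institution.
    dashboard = [
        RiskMetric("credit_default_rate", value=0.031, threshold=0.025),
        RiskMetric("security_incidents_per_month", value=2, threshold=5),
        RiskMetric("fraud_model_accuracy", value=0.88, threshold=0.90, higher_is_worse=False),
    ]
    print(breaches(dashboard))  # ['credit_default_rate', 'fraud_model_accuracy']
```

Keeping the direction of each metric explicit (`higher_is_worse`) lets a single rule cover both risk rates, where rising values are bad, and quality measures, where falling values are bad.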

In the Ethics & Oversight dimension, boards that treat AI ethics as peripheral, or react only when controversies erupt, remain stuck in the reactive stage. A more proactive stance involves setting clear ethical guidelines, adopting recognized frameworks, and periodically auditing AI models for fairness. IBM’s AI Fairness 360 toolkit illustrates how systematic checks can help mitigate bias and maintain stakeholder trust[4]. Boards may require management to document how AI decisions are made, which data sets are used, and whether those data sets are subject to potential biases.
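As an illustration of the kind of check a fairness audit might include, the sketch below computes the disparate impact ratio: the favorable-outcome rate for an unprivileged group divided by the rate for a privileged group. AI Fairness 360 reports this metric among many others, but the code is a hand-rolled Python sketch, not the toolkit’s API. The 0.8 cutoff is the common “four-fifths” rule of thumb, and the loan-approval data are invented for illustration.

```python
def selection_rate(outcomes: list[int]) -> float:
    """Share of favorable outcomes, where 1 = favorable (e.g. loan approved) and 0 = not."""
    if not outcomes:
        raise ValueError("need at least one outcome")
    return sum(outcomes) / len(outcomes)


def disparate_impact(unprivileged: list[int], privileged: list[int]) -> float:
    """Unprivileged group's selection rate divided by the privileged group's."""
    privileged_rate = selection_rate(privileged)
    if privileged_rate == 0:
        raise ValueError("privileged group has no favorable outcomes; ratio undefined")
    return selection_rate(unprivileged) / privileged_rate


def needs_review(ratio: float, cutoff: float = 0.8) -> bool:
    """Flag a model whose ratio falls below the four-fifths rule of thumb."""
    return ratio < cutoff


if __name__ == "__main__":
    # Invented approval decisions for two applicant groups (illustrative only).
    group_a = [1, 0, 0, 1, 0, 1, 0, 0, 0, 1]  # unprivileged: 4 of 10 approved
    group_b = [1, 1, 0, 1, 1, 0, 1, 1, 0, 1]  # privileged: 7 of 10 approved
    ratio = disparate_impact(group_a, group_b)
    print(round(ratio, 2), needs_review(ratio))  # 0.57 True
```

A single ratio is only a screening signal; a board would expect management to investigate flagged models rather than treat the cutoff as a verdict.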

At the transformative stage, boards integrate ethical considerations into every facet of AI deployment. They might form an AI Ethics Committee empowered to veto or modify high-risk projects. Ethical oversight then merges with strategic planning, ensuring that the board balances innovation with its societal responsibilities. This approach not only safeguards stakeholder interests but can also create a more stable environment for AI-driven growth.

The Culture & Collaboration dimension recognizes that even the most robust processes can be undermined by a board or management culture that resists AI integration. In a reactive environment, AI may be seen as a threat to established routines, and directors may question its reliability, underestimating its potential or ignoring the data signals it provides. Proactive boards encourage collaboration between technology teams, executive management, and directors. They might sponsor cross-functional AI task forces or hold joint training sessions for senior leadership and board members, breaking down silos and fostering a unified approach to technology adoption. Governance becomes transformative when AI insights naturally inform everyday decision-making.

Although the matrix divides maturity into three distinct stages, we expect real-world governance transitions to be incremental and iterative. A board might be proactive in some dimensions, such as People & Expertise, while still reactive in others, such as Culture & Collaboration. Reaching a uniformly transformative state requires coordinated advances across all five dimensions.

Implementing the AI Governance Maturity Matrix is not a simple checkbox exercise. Boards must actively steer progress, often by undertaking:

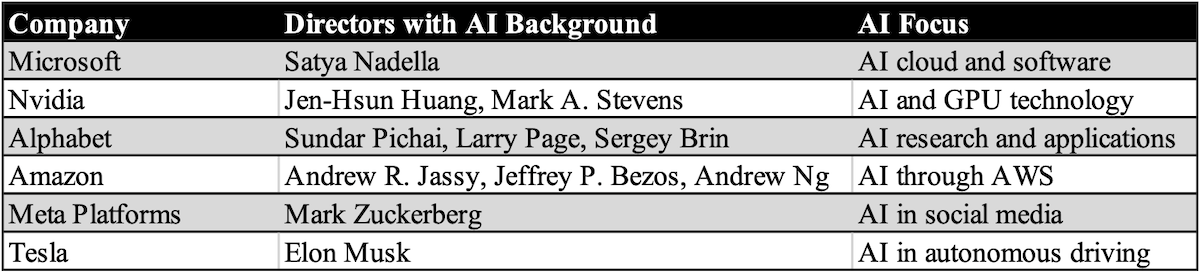

Where do companies currently stand in this matrix? A quick survey of the 50 largest U.S. companies by market capitalization (as of February 2025) shows that only six have directors with AI backgrounds (Table 2). That all six are technology companies suggests that most companies are probably still in the reactive stage.

Table 2: Companies Having Directors with AI Backgrounds

We also found a handful of companies using AI in boardroom decision-making, with some pioneers integrating AI systems directly into their governance structures. Deep Knowledge Ventures appointed VITAL, an algorithm that analyzes biotech investment data to flag risks and identify promising opportunities[5]. Rakuten introduced a “Robo-Director” that processes market trends and business metrics to enhance strategic planning discussions[6]. Most notably, International Holding Company (IHC) deployed “Aiden Insight” as a board observer that provides real-time analytics during meetings; it recently identified receivables management challenges and recommended operational efficiency improvements[7]. The Real Estate Institute of NSW similarly introduced “Alice Ing” as an AI advisor to the board that processes market research and industry trends[8].

Beyond these formal AI advisors, boards are using AI for specific governance tasks. Several boards have used IBM’s Watson to run predictive scenarios on potential mergers and capital investments, obtaining data-grounded second opinions to complement management reports. Mitsubishi Corporation has piloted AI analytics that simulate financial outcomes to check that board decisions align with long-term corporate strategy. In compliance monitoring, International Holding Company’s AI observer tracks ethical parameters and governance frameworks and flags potential compliance gaps. Financial sector boards use AI risk analytics to detect patterns of fraud, and insurance companies analyze past incidents to improve controls and predict future vulnerabilities[9].

To translate the AI Governance Maturity Matrix from concept into action, we’ve developed a practical self-assessment tool for boards. This diagnostic instrument allows directors to evaluate their current governance practices across each dimension and identify specific improvements to advance their maturity level. By completing this assessment annually, boards can track progress and prioritize governance enhancements that align with their strategic AI objectives.

Directors score each question on a scale of 1 to 3 (1 = Reactive, 2 = Proactive, 3 = Transformative); under each question, the three options are listed in that order. These scores determine the board’s maturity level across the five dimensions.

How does AI feature in board-level strategic discussions?

☐ Discussed only when specific issues arise

☐ Regular agenda item with defined metrics

☐ Fully integrated into strategic planning and future scenarios

How does the board evaluate the strategic impact of AI initiatives?

☐ No formal evaluation process

☐ Periodic reviews of major AI projects

☐ Continuous assessment using AI-driven analytics for strategic decision-making

How are AI investments prioritized at the board level?

☐ Ad hoc approval of individual initiatives

☐ Alignment with established strategic priorities

☐ AI portfolio approach with risk-adjusted return metrics

What AI expertise exists within the board?

☐ Limited expertise, reliance on management explanations

☐ One or more directors with AI knowledge or dedicated advisory resources

☐ Diverse AI expertise including technical, ethical, and strategic dimensions

How does the board develop its collective AI competency?

☐ No formal development program

☐ Periodic training sessions and external expert presentations

☐ Ongoing education program with immersive experiences and industry partnerships

How does the board access specialized AI expertise when needed?

☐ Ad hoc external consultations

☐ Established relationships with expert advisors

☐ Standing technology committee with dedicated AI specialists

How frequently does the board receive AI performance metrics?

☐ Irregularly or only when issues arise

☐ Regular scheduled reports with standardized metrics

☐ Real-time dashboards with predictive indicators

How does the board monitor AI risks?

☐ Reactive approach to identified issues

☐ Regular risk assessments with defined thresholds

☐ Integrated risk monitoring with automated alerts and scenario modeling

How are AI insights incorporated into board decision-making?

☐ Minimal integration with traditional decision processes

☐ Dedicated analysis of AI-generated insights for major decisions

☐ AI-augmented decision frameworks for all strategic matters

How has the board defined ethical boundaries for AI applications?

☐ No formal ethical framework

☐ Documented ethical guidelines with compliance monitoring

☐ Comprehensive ethical framework with third-party validation and stakeholder input

How does the board ensure AI fairness and prevent bias?

☐ Limited oversight, issues addressed as they arise

☐ Regular audits of high-risk AI systems

☐ Continuous monitoring with established remediation protocols and transparent reporting

How does the board balance innovation with responsible AI use?

☐ No formal process for evaluation

☐ Stage-gated approval process with ethics review

☐ Integrated framework that accelerates ethical AI and restricts high-risk applications

How does the board foster cross-functional collaboration on AI?

☐ Limited interaction between board and AI teams

☐ Structured engagement between directors and AI leadership

☐ Integrated collaborative ecosystem including external partners and stakeholders

How open is the board to AI-driven insights that challenge conventional wisdom?

☐ Skepticism toward AI-generated recommendations

☐ Willingness to consider AI insights alongside traditional analysis

☐ Culture of data-driven decision-making where AI insights regularly influence direction

How does the board encourage responsible AI innovation?

☐ Limited involvement in innovation processes

☐ Defined innovation guidelines with ethical boundaries

☐ Active sponsorship of responsible AI initiatives with appropriate risk tolerance

For each dimension, the board can calculate the average score to gauge where it lies in the matrix:

For dimensions scoring below 2.0, the board should identify the three most important actions to advance maturity. It can assign clear ownership and timeframes for implementation, with quarterly board reviews to track progress. This self-assessment should be conducted annually, with results informing board education plans, committee structures, and strategic priorities for the coming year.
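The scoring arithmetic can be sketched in a few lines of Python. The sketch assumes the 15 questions are answered in the order listed, three per dimension, with the dimensions in the order introduced at the start of this article. Because the article does not specify how averages map to stages, the code only reports each average and flags those below 2.0, as prescribed above. The function names and sample answers are hypothetical.

```python
DIMENSIONS = [
    "Strategy & Vision",
    "People & Expertise",
    "Processes & Analytics",
    "Ethics & Oversight",
    "Culture & Collaboration",
]
QUESTIONS_PER_DIMENSION = 3  # assumption: three consecutive questions per dimension


def dimension_averages(scores: list[int]) -> dict[str, float]:
    """Average the 1-3 scores (1 = Reactive, 2 = Proactive, 3 = Transformative) per dimension."""
    expected = len(DIMENSIONS) * QUESTIONS_PER_DIMENSION
    if len(scores) != expected:
        raise ValueError(f"expected {expected} scores, got {len(scores)}")
    if any(s not in (1, 2, 3) for s in scores):
        raise ValueError("each score must be 1, 2, or 3")
    averages = {}
    for i, dimension in enumerate(DIMENSIONS):
        block = scores[i * QUESTIONS_PER_DIMENSION:(i + 1) * QUESTIONS_PER_DIMENSION]
        averages[dimension] = sum(block) / QUESTIONS_PER_DIMENSION
    return averages


def priority_dimensions(averages: dict[str, float], cutoff: float = 2.0) -> list[str]:
    """Dimensions averaging below the cutoff, which call for a targeted action plan."""
    return [dimension for dimension, avg in averages.items() if avg < cutoff]


if __name__ == "__main__":
    # Hypothetical answers to the 15 questions, in questionnaire order.
    answers = [1, 2, 2, 2, 2, 3, 1, 1, 2, 2, 3, 3, 1, 1, 1]
    averages = dimension_averages(answers)
    for dimension, avg in averages.items():
        print(f"{dimension}: {avg:.2f}")
    print(priority_dimensions(averages))
    # ['Strategy & Vision', 'Processes & Analytics', 'Culture & Collaboration']
```

With these sample answers, Strategy & Vision (1.67), Processes & Analytics (1.33), and Culture & Collaboration (1.00) fall below 2.0, so each would need its three most important actions identified and assigned.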

This self-assessment is designed to stimulate candid discussion among directors about AI governance gaps and opportunities. We recommend that boards complete this evaluation collectively during a dedicated session, possibly facilitated by the lead independent director or governance committee chair. The results should inform concrete action plans with assigned responsibilities and clear timelines for implementation.

Even well-intentioned boards encounter obstacles. One frequent pitfall is overreliance on external consultants, which keeps knowledge outside the organization and hampers internal capacity building. Another is token AI oversight, in which a board establishes an AI committee that lacks meaningful authority or integration with enterprise strategy. Finally, an excessive short-term focus can overshadow the importance of building robust, ethically sound AI ecosystems that deliver value over time.

To circumvent these challenges, boards can:

In each case, the board should focus on embedding AI governance into its core duties rather than treating it as a side project.

The AI revolution demands new governance capabilities from boards, and real-world cases demonstrate both the perils of inadequate oversight and the competitive advantages of strategic AI implementation. We hope that our AI Governance Maturity Matrix provides a practical roadmap for moving from reactive firefighting to transformative leadership across five critical dimensions of board effectiveness. By applying the maturity framework, conducting regular self-assessments, and committing to specific improvements, boards can close the gap between technological innovation and responsible oversight while maximizing long-term value creation.
