California Management Review

California Management Review is a premier academic management journal published at UC Berkeley

by Jessica Freeman-Wong, Daisy Munguia, and Jakki J. Mohr


Not unlike the struggles many businesses face in leveraging new technologies, higher education, too, struggles to keep pace with technological change and innovation. Today, the challenge higher education faces is whether to embrace or ban ChatGPT.


This past spring semester, as students experimented with ChatGPT to assist with their coursework, some universities banned the technology for fear of cheating. Yet policing the use of ChatGPT and other generative AI tools may be impractical: as of May 2023, the ChatGPT website was drawing roughly 1.8 billion visits per month.1 Instead of fearing the technology, educators must learn how to leverage it in their classrooms.

Here, we enumerate the concerns about ChatGPT in education, as well as the ways progressive educators are experimenting with it. We then briefly describe how education has evolved to meet the needs of employers and argue that, because the workplace of the future will be AI-enabled, educators must adopt that mindset as well. Finally, we apply insights from how businesses harness emerging technology to develop a strategy for educators to deploy ChatGPT effectively in higher education.

Generative AI (artificial intelligence) is the umbrella term for AI that creates new content. Text-based generative AI is trained on vast amounts of data from the internet and statistically predicts the next most likely word in a sequence. One of the most advanced and well-known examples is ChatGPT, developed by OpenAI. A type of large language model (LLM), ChatGPT automatically generates responses to “prompts,” or natural language inquiries, that users type into the program. The result is text that seems coherent and relevant to a given topic, albeit possibly inaccurate. Other types of generative AI can produce images, speech, and even music.
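The idea of predicting the “next most likely word” can be illustrated with a deliberately tiny Python sketch. It is a hypothetical toy, not how ChatGPT works: ChatGPT relies on a large neural network trained on enormous amounts of text, whereas this sketch merely counts which word follows which in a three-sentence corpus invented for illustration. The function names (`train_bigrams`, `most_likely_next`, `generate`) are likewise our own hypothetical inventions.

```python
from collections import Counter, defaultdict


def train_bigrams(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def most_likely_next(follows, word):
    """Return the word most often seen after `word`, or None if never seen.

    Counter.most_common breaks ties by first occurrence in the text.
    """
    counts = follows.get(word.lower())
    if not counts:
        return None
    return counts.most_common(1)[0][0]


def generate(follows, start, length=5):
    """Greedily extend `start` by appending the most likely next word."""
    out = [start.lower()]
    for _ in range(length):
        nxt = most_likely_next(follows, out[-1])
        if nxt is None:
            break
        out.append(nxt)
    return " ".join(out)


# Hypothetical toy corpus; a real LLM is trained on vastly more text.
corpus = (
    "students use chatgpt to write essays . "
    "students use chatgpt to check code . "
    "teachers use chatgpt to draft quizzes ."
)
model = train_bigrams(corpus)
print(generate(model, "teachers"))
```

Starting from “teachers,” the sketch produces “teachers use chatgpt to write essays,” a fluent sentence that appears nowhere in its training text. The model has no notion of whether such a sentence is accurate; it only knows which words tend to follow which.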

Given its ability to write essays, solve math problems, and write computer code, ChatGPT offers vast potential in education. Yet administrators and professors struggle to embrace it for many reasons:

Concerns about plagiarism and cheating. Students can use ChatGPT to write essays, answer quizzes, and more. Although efforts to minimize and detect student cheating are not new, detecting AI-generated writing requires new approaches. Some detection software claims to be able to determine, with a high degree of accuracy, whether an assignment was written with ChatGPT.2

Possibly inaccurate information. When asked for the square root of 423,894, ChatGPT confidently gave the wrong answer.3 Instead of 651.07 (the correct answer), it provided 650.63. In another case, a professor asked ChatGPT about a made-up term, “cycloidal inverted electromagnon.” ChatGPT wrote a plausible-sounding explanation that was totally bogus, as were its citations to nonexistent literature.4

Perpetuating biases. Because of the way it is trained, ChatGPT can inadvertently perpetuate biases present in its training data. For example, when asked about the history of the printing press, ChatGPT generated a U.S.-centric essay that included no information about printing presses in Europe or Asia.5

Student privacy and data security. OpenAI is vague about ChatGPT’s data privacy policy; the platform can access any information entered into it and can share that data with third parties.6 ChatGPT collects users’ IP addresses and location information, as well as any personal information typed into the chats. Educators and students alike are often unaware of these risks.

Diminished collaboration and social engagement. Some worry that heavy reliance on AI in the classroom can diminish social skills, undermining the collaboration and human emotional connection that are key aspects of in-person learning.7

Exacerbating the digital divide. The digital divide refers to inequalities between students who have ready access to technology and those who don’t, whether due to geography or socioeconomic status. As universities develop strategies to harness ChatGPT for student learning (as discussed below), there is concern that increased reliance on such technology could perpetuate existing educational inequalities.
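Errors like the square-root example above are easy to verify independently. The Python sketch below, which assumes nothing beyond the standard `math` module, recomputes the square root of 423,894 and squares the answer ChatGPT reportedly gave to show how far off it is.

```python
import math

number = 423_894
chatgpt_answer = 650.63  # the answer ChatGPT reportedly gave

correct = math.sqrt(number)
print(f"Square root of {number:,}: {correct:.2f}")  # 651.07

# Squaring ChatGPT's answer shows it misses the target by several hundred.
squared = chatgpt_answer ** 2
print(f"{chatgpt_answer} squared: {squared:,.2f}")  # 423,319.40
print(f"Shortfall: {number - squared:,.2f}")  # 574.60
```

Squaring the claimed root is a simple habit students can apply to any numerical answer a chatbot produces.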

A major concern with ChatGPT is its effect on student learning and comprehension of the topics they are studying. Many disciplines, such as anatomy and physiology, require basic knowledge acquisition in order to develop proficiency. Despite the ready availability of facts on their smartphones, students still need to be intimately familiar with the concepts in their discipline. Some note that these concerns echo earlier concerns about the calculator, which is now embraced as a standard educational tool.

Perhaps the largest concern is that ChatGPT might undermine students’ development of critical thinking and problem-solving skills.8 If students rely too heavily on generative AI tools to produce their work, it could undermine not only their own understanding of the topic of study but also their ability to think independently and solve problems.

Despite these concerns, tech-savvy, early adopter educators have found intriguing ways to leverage generative AI in the classroom, as the following examples illustrate.

Learn about misinformation and bias: While misinformation and bias are primary controversies around generative AI, creative teachers are using it to help students evaluate information. For example, students can learn to critique ChatGPT responses for misinformation and bias. Take the prior example of the U.S.-centric answer about the history of the printing press. By pointing out the problem, the teacher led an in-depth discussion on bias. One user calls this assignment “grade the bot.”9

Strengthen arguments: Another use of ChatGPT is as a “debate partner” of sorts, allowing students to think through opposing views to strengthen their own arguments.10 Students can also critique the responses ChatGPT generates, to identify weaknesses or even to fact-check it.11

Provide real-time feedback and assessment: Students can paste their written work into ChatGPT and ask how they can improve their writing.12 Some professors encourage students to use it to smooth out awkward first drafts.13

Customize learning: ChatGPT can customize learning plans for different learning styles. Quizlet, an education technology company, created a feature called Q-Chat using ChatGPT; its app adjusts the difficulty of questions based on a student’s learning style and how well they know the material they’re being quizzed on.

Outside the classroom, professors are using ChatGPT to help them craft learning objectives, generate wrong answers for multiple-choice tests, and more.

Certainly, these uses represent important initial efforts. At the same time, one-off pilots and experiments are not a viable strategy for technology utilization. Learning to leverage AI, particularly to augment human capabilities, is a vital skill for the workforce of the future.14 Hence, the relationship between the education system and employers’ needs offers an important perspective on the role of ChatGPT in education.

Since its inception, public education in the U.S. has been influenced by the needs of employers. Even in the 1800s, classrooms were designed, in part, to prepare students for the industrial age; factory owners wanted workers who would arrive on time, do what they were told, and work long hours. Hence, the schooling model of sitting in a classroom all day and mastering age-based curriculum was designed to fulfill these factory owners’ needs.15

In the 1950s, business education was “more akin to vocational training than to science” and was criticized for its lack of rigor and scholarly merit.16 Although changes were made in response to that criticism, they focused more on analytical skills than on “the messy ambiguities of the real world.” More recently, the rise of big data and data analytics spurred another change, leading to a proliferation of programs that develop the skills employers demand in those areas.

Now, the education system must evolve again, this time to address how to usefully incorporate powerful tools like ChatGPT in learning,17 while ensuring that its use does not detract from the skills and capabilities required for graduates to effectively navigate the volatile, uncertain challenges facing humanity.

We argue that understanding how businesses craft strategies for adopting emerging technology offers a useful perspective for meeting this challenge and crafting solutions. Here, we build on that idea to show how educational institutions can learn from business to develop a cohesive strategy for ChatGPT and other generative AI tools.

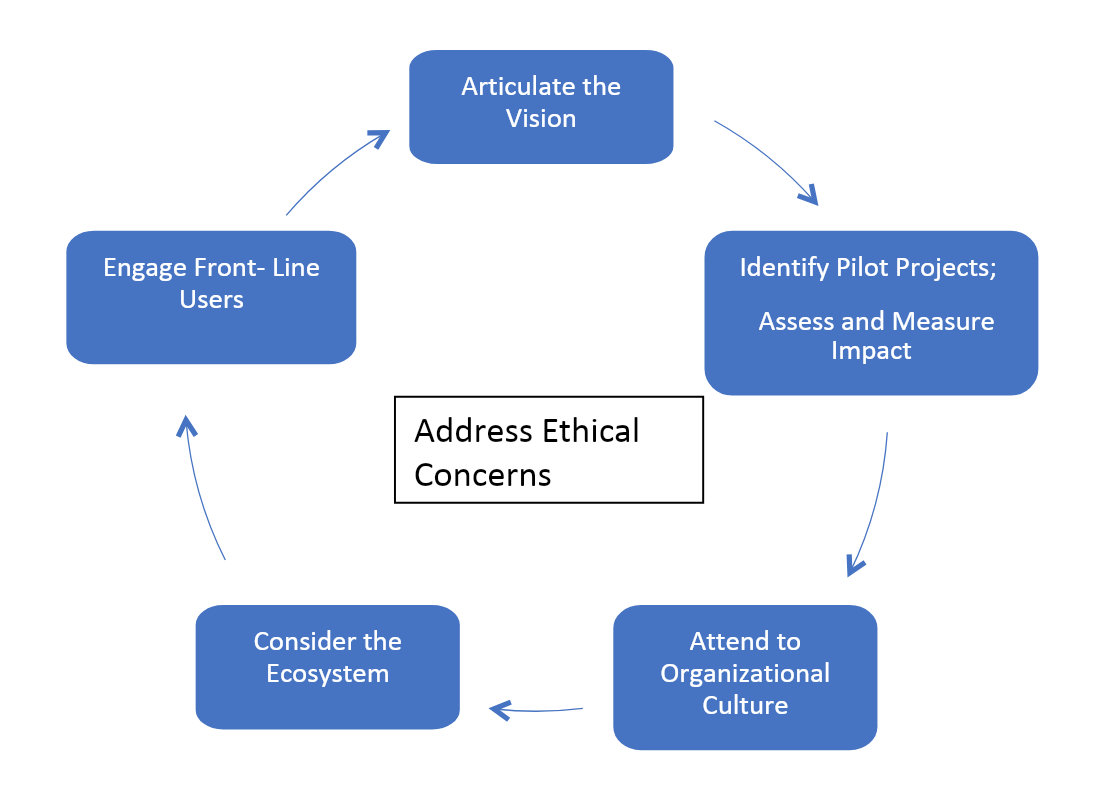

Just as businesses develop a strategy to harness emerging technologies, so, too, should education systems. Drawing on best practices, integrated across multiple sources,18 for how companies develop an AI strategy, we outline such a strategy for higher education (see Figure).

First, educational leaders must articulate a clear vision of why educators should embrace, rather than ban, generative AI tools such as ChatGPT. Business leaders know that early attention to a vision that rallies the team around new technologies plays an outsized role in whether and how employees embrace them. In particular, effective visioning can highlight opportunities to use AI to better equip students for future careers, identify the risks to education if it does not embrace AI, or even articulate the existential crisis that could arise if educators are unable to keep pace with emerging technology.

Second, much as companies use pilot projects to test and learn as they adopt new technologies, educational leaders can launch pilots in selected academic programs to systematically explore the potential and pitfalls of ChatGPT. These pilots can help identify best practices, evaluate challenges, and refine implementation strategies. Insights from carefully selected pilots can show where and how AI integration offers value, and assessing AI’s impact on student learning and other key outcomes can inform its value proposition. Based on these pilots, institutions can then develop a strategy to scale its use across the curriculum and beyond.

Third, as in business, fostering a culture of innovation is key to an institution’s ability to experiment with, and embrace, opportunities to use generative AI across the curriculum. Culture, the values and beliefs shared across the institution, is shaped by leaders’ behaviors and communication, and is reinforced by processes and reward systems. Research identifies culture as the most significant issue in organizational adoption of technology. Developing an agile, experimental, adaptable mindset is part and parcel of a culture of innovation, and is more important than ever in education today.19

Fourth, diffusing generative AI in education requires attention to the ecosystem in which it will be used, including accreditation systems, grading and testing systems, teacher evaluations, and more. Fortunately, the COVID-19 pandemic prompted a renewed effort by universities to leverage technology for learning. Likewise, adequate funding and resources must be allocated to support AI in education, including investments in infrastructure, AI tools and technologies, and training (discussed next). Partnerships with government agencies, industry leaders, and technology companies can facilitate knowledge sharing, research collaborations, and the development of AI tools tailored for education.

Fifth, as in business, training front-line users is key. Enhancing teachers’ AI competencies, by giving them the necessary understanding of AI and its potential applications in education, is vital. Professional development should cover topics such as prompt engineering, sources of bias and misinformation, and more. Support should extend to developing AI-focused curricula and instructional resources so that teachers can integrate AI into their teaching practices effectively. Ongoing support, technical assistance, and monitoring are required for effective implementation; help desks, professional learning communities, and feedback mechanisms can help share best practices. For example, sharing ways ChatGPT can save time in preparing course materials, assist in grading and providing immediate feedback to students, and help educators create customized lesson plans for students with diverse needs can alleviate some educators’ concerns.

Throughout the process, leaders must be mindful of data privacy, security, and ethical considerations. For example, equitable access to AI tools and resources is required to ensure that all students can benefit from AI-enabled education. Moreover, emerging technologies are rife with ethical quandaries and unintended consequences;20 addressing these concerns effectively requires not only proactively identifying the ethical issues that arise but also strategically managing them.

Some argue that, to the extent ChatGPT is a disruptive force in education, wholesale changes to the very structure and design of the institution may be required. However, given the institutional factors that make such wholesale change difficult, we believe that this strategic approach offers a viable path forward. By adopting it, educational leaders can foster a system that empowers educators to enhance teaching and learning and to prepare students for the future.

The introduction of ChatGPT, an AI-powered chatbot, has sparked discussion and concern about its potential impact on education. Rather than viewing AI as a threat, educators should proactively develop a strategy to harness it productively. Borrowing from business practices for the strategic adoption of emerging technology is a logical approach. Embracing technology is required to ensure that the education system remains relevant and effective in preparing students for the demands of the modern world.

1. Ruby, Daniel (2023), “57+ ChatGPT Statistics for 2023 (New Data + GPT-4 Facts),” Demand Sage, May 18.

2. McMurtrie, Beth (2023), “ChatGPT Is Everywhere: Love It or Hate It, Academics Can’t Ignore the Already Pervasive Technology,” Chronicle of Higher Education, March 6.

3. Ansari, Tasmia (2022), “Freaky ChatGPT Fails That Caught Our Eyes,” Analytics India Magazine, December 7.

4. Wingard, Jason (2023), “ChatGPT: A Threat to Higher Education?” Forbes, January 10.

5. Heaven, Will Douglas (2023), “ChatGPT: Change, Not Destroy, Education,” MIT Technology Review, April 6.

6. Pal, Anagh (2023), “Data You Share with ChatGPT Is Not Private: So Be Careful with What You Reveal,” Business Insider India, March 31.

7. Shonubi, Olufemi (2023), “AI in the Classroom: Pros, Cons and the Role of EdTech Companies,” Forbes, February 21.

8. Alphonso, Geoffrey (2023), “Generative AI: Education in the Age of Innovation,” Forbes, May 3.

9. Miller, Matt (2022), “Ditch That Textbook: Artificial Intelligence in Education,” Ditch That Textbook, December 17.

10. Roose, Kevin (2023), “AI Chatbots like ChatGPT Are Transforming Schools and Supporting Teachers,” The New York Times, January 12.

11. Wingard, op. cit.

12. Miller, op. cit.

13. McMurtrie, op. cit.

14. Davenport, Thomas and Steven Miller (2022), Working with AI: Real Stories of Human-Machine Collaboration, MIT Press; Penn, Christopher (2021), “Preparing Your Career for AI,” in AI for Marketers: An Introduction & Primer, Third Edition, pp. 166-194.

15. Schrager, Allison (2018), “The Modern Education System Was Designed to Teach Future Factory Workers to Be ‘Punctual, Docile, and Sober,’” Quartz, June 19.

16. Schoemaker, Paul J.H. (2008), “The Future Challenges of Business: Rethinking Management Education,” California Management Review, 50(3), 119-139.

17. Scott, Inara (2023), “Yes, We Are in a (ChatGPT) Crisis,” Inside Higher Ed, April 18.

18. Fountaine, T., B. McCarthy, and T. Saleh (2019), “Building the AI-Powered Organization: Technology Isn’t the Biggest Challenge. Culture Is,” Harvard Business Review, 97(4), 62ff.; McKinsey & Company (2022), “The Data-Driven Enterprise of 2025,” McKinsey.com; Ransbotham, Sam, Francois Candelon, David Kiron, Burt LaFountain, and Shervin Khodabandeh (2021), “The Cultural Benefits of Artificial Intelligence in the Enterprise,” MIT Sloan Management Review and Boston Consulting Group, November; Fleming, Oliver, Tim Fountaine, Nicolaus Henke, and Tamim Saleh (2018), “Ten Red Flags Signaling Your Analytics Program Will Fail,” McKinsey.com, May 14; Chui, Michael (2022), “Generative AI Is Here: How Tools Like ChatGPT Could Change Your Business,” McKinsey & Company, December 20; Budden, Philip and Fiona Murray (2022), “Strategically Engaging With Innovation Ecosystems,” MIT Sloan Management Review, July 20; Lang, Nikolaus, Konrad von Szczepanski, and Charline Wurzer (2019), “The Emerging Art of Ecosystem Management,” Boston Consulting Group; Knight, Rebecca (2015), “Convincing Skeptical Employees to Adopt New Technology,” Harvard Business Review, March.

19. Wagner, Tony (2015), Most Likely to Succeed: Preparing Our Kids for the Innovation Era, New York, NY: Scribner.

20. Blackman, Reid (2023), “How to Avoid the Ethical Nightmares of Emerging Technology,” Harvard Business Review, May 9.