California Management Review

California Management Review is a premier professional management journal for practitioners published at UC Berkeley Haas School of Business.

Ch. Mahmood Anwar

Image Credit | Louis Deconinck

The digital revolution has made artificial intelligence (AI) a part of everyday organizational life, and managerial decision-making increasingly relies on it. AI already helps speed up recruitment processes and predict future market trends, which highlights its crucial role in making work more effective and efficient. However, along with AI’s power comes a vital need to focus on ethics and fairness. As AI becomes more widespread, managers face challenges related to bias, transparency about how AI functions, and its impact on society. It is crucial for managers to be aware of these issues while using AI in their work, especially in decision-making (Williamson & Prybutok, 2024).


One of the significant ethical issues we are addressing today is algorithmic bias. AI systems learn from historical data, which means they can continue and even worsen existing societal biases. This can result in unfair treatment of people. For example, recruitment algorithms based on past hiring practices might unintentionally exclude qualified candidates from minority groups (Dastin, 2018). To prevent this, it is crucial to take active steps to review and improve AI systems to ensure they are fair and ethical. According to Wachter et al. (2021), the problem of algorithmic fairness is not just about technology; it is also deeply connected to politics and society. Therefore, solving this issue needs a broad approach that includes different people and groups.

Many AI systems work like ‘black boxes,’ which means people cannot see what happens inside. This makes it hard to know how they make choices, raising concerns about clarity and responsibility. Explainable AI (XAI) is very important because it helps build trust, allowing managers to understand why AI makes certain decisions. According to Miller (2019), explaining things is a big part of how humans think and understand. It is important to show why and how AI does what it does to help humans. Mittelstadt (2019) points out that when we talk about the ethics of AI, it is not only about what AI can do technically, but also about what it should do ethically. This means it is important to make sure that how we develop AI is in line with what society values and believes is right. Managers should ask AI sellers for XAI features and make sure they learn how to understand and use these explanations correctly.

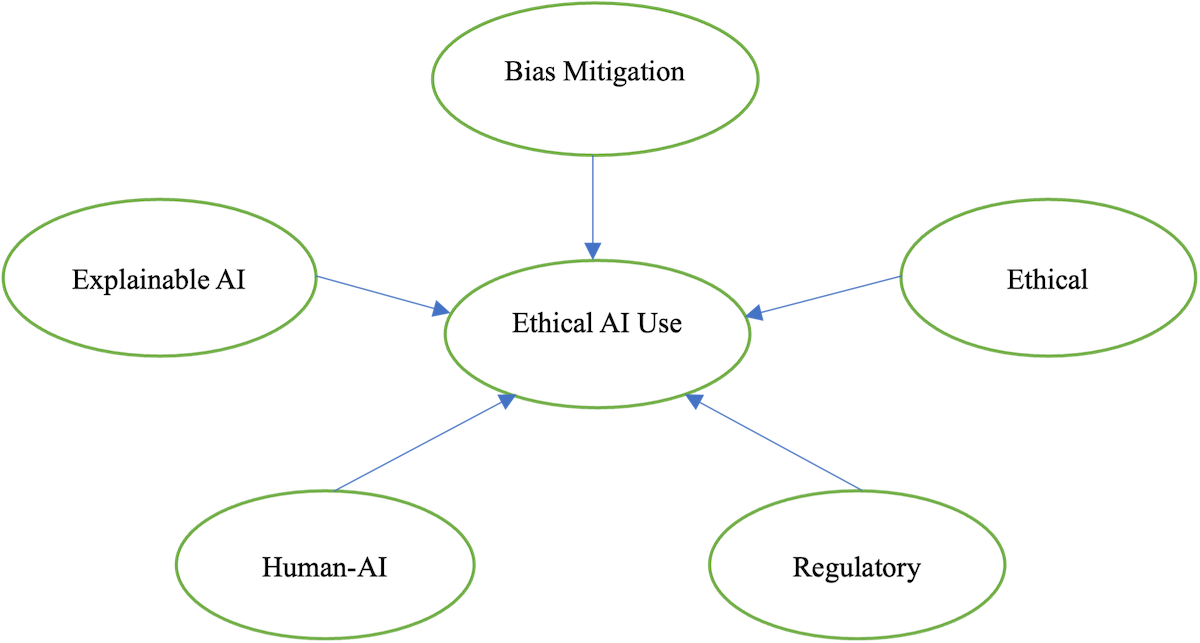

Below, I present the key aspects that managers should consider to ensure the ethical use of AI (see Figure 1):

AI is increasingly used in hiring, performance evaluation, and customer segmentation. While this offers many advantages, it is important to first address any biases that might be present in these systems. Tackling these biases starts with a careful examination of the data. Mehrabi et al. (2021) point out that bias can enter at any stage of data handling, so regular checks are essential to ensure fair representation of different groups. In hiring, this means analyzing the training data to avoid reproducing historical biases against certain demographics. For job performance evaluations, it is crucial to gather feedback from diverse sources to prevent reliance on a limited, possibly biased dataset. For customer segmentation, we need to be cautious about which features we use: a zip code, for instance, can act as a proxy for socioeconomic status and inadvertently introduce bias. To make AI fairer, we can use ‘adversarial debiasing’ techniques, which train models so that their predictions reveal as little as possible about sensitive attributes like race or gender, as suggested by Madras et al. (2018). Additionally, being transparent about data sources and the reasons behind our decisions helps us improve continuously. Regular feedback from the stakeholders involved is vital to spot any new biases and ensure AI systems reflect the ethical values of the organization.
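To make the idea of a regular bias check concrete, the following is a minimal sketch in Python of one common fairness measure: comparing selection rates across groups. The data, the group labels, and the 0.8 review threshold are illustrative assumptions, not figures from any study cited here; an organization would choose its own metric and threshold with legal and ethical advice.

```python
from collections import defaultdict


def selection_rates(records):
    """Return the share of positive outcomes per group.

    records: iterable of (group, selected) pairs, where selected is a bool.
    """
    totals = defaultdict(int)
    positives = defaultdict(int)
    for group, selected in records:
        totals[group] += 1
        positives[group] += int(selected)
    return {g: positives[g] / totals[g] for g in totals}


def disparate_impact_ratio(rates):
    """Ratio of the lowest to the highest group selection rate (1.0 = parity)."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was selected in any group, so there is no gap to measure
    return min(rates.values()) / highest


# Hypothetical screening outcomes: (group label, shortlisted?)
outcomes = (
    [("A", True)] * 40 + [("A", False)] * 60
    + [("B", True)] * 24 + [("B", False)] * 76
)

rates = selection_rates(outcomes)
ratio = disparate_impact_ratio(rates)
needs_review = ratio < 0.8  # illustrative threshold, an assumption of this sketch
```

A low ratio does not prove discrimination by itself, but it flags a gap between groups that deserves a closer look at the training data and features.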

Explainable AI (XAI) goes beyond just being clear; it is about building trust and helping managers make well-informed decisions. To effectively use Explainable AI (XAI), you need a strategic plan. First, you should figure out why you need explanations. Is it for meeting regulations, earning user trust, or fixing problems with the model? Understanding this helps you decide how detailed the explanations need to be. As Molnar (2020) mentions, “interpretability is not a single concept,” and choosing the right XAI method depends on your specific need. Next, focus on using model-agnostic methods like Local Interpretable Model-agnostic Explanations (LIME) or SHapley Additive exPlanations (SHAP) because they are versatile and work well with complex models that are hard to interpret. However, if you are working with models like decision trees, which are already easier to understand, use their natural clarity to your advantage. Also, it is important to design ‘explanation interfaces’ that suit the level of expertise of the user. According to Ribeiro et al. (2016), visualizing local explanations and highlighting important features can greatly help users grasp specific scenarios. Another important step is establishing a feedback loop. Regularly ask users if the explanations are clear and useful, and make improvements based on their feedback. Additionally, make sure to integrate XAI into every stage of the AI workflow, from data preparation to the deployment of models. This practice is crucial for regularly checking for biases and for taking necessary actions early on. Finally, it is essential to document the XAI processes in detail, including the methods used and their limits. This documentation supports accountability and makes it easier for others to replicate your approach. By following these steps, organizations can turn XAI from just an idea into a practical tool that helps build AI systems people can trust and understand.
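For readers who want to see what a feature-level explanation looks like, here is a minimal sketch in Python of exact Shapley value attribution, the idea on which SHAP builds. It is not the SHAP library itself: it enumerates every feature coalition, which is only feasible for a handful of features. The scoring function, the feature names, and the ‘average applicant’ baseline are hypothetical and serve only as an illustration.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, instance, baseline):
    """Exact Shapley attributions for a single prediction.

    Features outside a coalition are replaced by their baseline value.
    Cost grows as 2**n, so this only suits a small number of features.
    """
    names = list(instance)
    n = len(names)

    def value(coalition):
        mixed = {f: (instance[f] if f in coalition else baseline[f]) for f in names}
        return predict(mixed)

    result = {}
    for feature in names:
        others = [f for f in names if f != feature]
        total = 0.0
        for size in range(n):
            # Standard Shapley weight for a coalition of this size
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                members = set(subset)
                gain = value(members | {feature}) - value(members)
                total += weight * gain
        result[feature] = total
    return result


# Hypothetical credit-scoring model, used only for illustration.
def toy_score(x):
    return 0.5 * x["income"] + 0.3 * x["tenure"] - 0.2 * x["debt"]


applicant = {"income": 8.0, "tenure": 2.0, "debt": 5.0}
average_applicant = {"income": 5.0, "tenure": 4.0, "debt": 3.0}

contributions = shapley_values(toy_score, applicant, average_applicant)
```

The contributions add up to the difference between the applicant’s score and the baseline score, so a manager can read them as ‘how much each feature moved this decision away from the average case’.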

Traditional ethical frameworks are an important foundation for business ethics, but they need to be adapted for managing AI. Utilitarianism, which aims to maximize overall well-being, struggles with AI because AI can have unexpected effects, and it is difficult to measure well-being in complex systems. Researchers like Mittelstadt et al. (2016) point out that AI often spreads responsibility among many agents, making it difficult to determine who is accountable for outcomes. Deontology, which focuses on following rules and duties, also faces challenges in setting universal rules for AI, because technology changes quickly and cultural contexts differ widely. Therefore, instead of sticking strictly to one ethical approach, a combination of ideas is needed. For example, a utilitarian approach could help in designing an AI system initially, while deontological principles could ensure compliance with data privacy regulations. Moreover, virtue ethics, which emphasizes good character and moral behavior, becomes increasingly important in creating an ethical culture around AI in organizations. Vallor (2016) emphasizes that cultivating ‘technomoral virtues’ such as transparency, accountability, and fairness is essential for responsible AI management. This involves integrating ethical considerations into every stage of AI development and deployment, promoting a thoughtful and proactive approach to handling the unique moral dilemmas that AI presents.

Figure 1: Important Aspects of Ethical AI Use

To work well with AI, managers need to change their role from just giving orders to being partners with AI. It is important to use AI for tasks like analyzing data, finding patterns, and making predictions, while managers focus on human skills such as empathy, creativity, and making ethical choices. As Rahwan (2018) states, AI should be a partner, not a rival, allowing managers to concentrate on important organizational decisions. Building a strong understanding between humans and AI is key. This means training managers to know what AI is good at, what it is not, how to ethically interpret AI results, and how to provide feedback to improve AI. Natarajan et al. (2023) emphasize designing systems where both humans and AI can start and manage tasks together in a flexible manner. Making AI processes easy to understand helps build trust and smooth collaboration. Managers must be able to question AI’s advice to maintain critical oversight. Setting up clear communication methods and feedback systems ensures easy information flow and continuous learning. By creating a respectful and understanding working culture, organizations can truly benefit from the collaboration between humans and AI.

The rules surrounding AI ethics are changing quickly, so organizations must stay proactive in following them. There is a noticeable shift from voluntary ethical guidelines to binding laws, especially regarding data privacy and the fairness of algorithms. As mentioned by Yeung (2018), ‘regulatory innovation’ is necessary to match the fast-paced development of AI. One important step is to create an internal AI ethics board made up of legal, technical, and ethical specialists. This board’s role is to stay updated on regulatory changes and check for any potential risks. Organizations should conduct regular AI audits, focusing on the sources of data, the transparency of algorithms, and the detection of biases. This is in line with recommendations from the EU’s proposed AI Act (European Commission, 2021). Implementing strong data governance policies is essential, with an emphasis on data minimization and clearly stated purposes for data use, both of which matter for complying with GDPR and similar regulations. Additionally, putting ‘human-in-the-loop’ systems in place is crucial, as it ensures that human oversight and responsibility are maintained in important AI-related decisions. Engaging with regulators and taking part in industry conversations about AI ethics can offer valuable insights into new best practices. Finally, it is important to document all processes related to AI development and implementation, including risk assessments and ways to handle potential issues, as this is key for proving compliance and building trust with stakeholders.
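As an illustration of how a ‘human-in-the-loop’ rule and an audit trail might fit together, consider the following minimal sketch in Python. The field names, the routing labels, and the 0.9 confidence threshold are all hypothetical choices made for this example; they are not prescribed by the EU AI Act, GDPR, or any other regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Recommendation:
    case_id: str
    action: str
    confidence: float  # model's self-reported confidence in [0, 1]
    high_stakes: bool  # e.g. hiring, lending, or dismissal decisions


def route(rec, min_confidence=0.9):
    """Allow automation only for low-stakes, high-confidence recommendations."""
    if rec.high_stakes or rec.confidence < min_confidence:
        return "human_review"
    return "auto"


def audit_entry(rec, min_confidence=0.9):
    """Build a plain record that can be appended to an audit log."""
    return {
        "case_id": rec.case_id,
        "action": rec.action,
        "confidence": rec.confidence,
        "route": route(rec, min_confidence),
    }


# Hypothetical recommendations produced by an AI system
hiring = Recommendation("c-001", "reject_candidate", 0.97, high_stakes=True)
restock = Recommendation("c-002", "reorder_supplies", 0.95, high_stakes=False)
unsure = Recommendation("c-003", "reorder_supplies", 0.60, high_stakes=False)

log = [audit_entry(r) for r in (hiring, restock, unsure)]
```

In this sketch, a high-stakes decision always goes to a person regardless of the model’s confidence, and every routing decision leaves a record that an internal ethics board or auditor could later inspect.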

The aspects discussed above are summarized in Table 1.

| Feature | AI Bias Mitigation | Explainable AI (XAI) | Ethical Frameworks | Human-AI Collaboration | Regulatory Compliance |
|---|---|---|---|---|---|
| Definition | Techniques and processes for reducing and eliminating unfair biases in AI systems. | Methods to make AI decision-making processes transparent and understandable to humans. | Guiding principles and values for the ethical development and deployment of AI. | Strategies for effectively combining human and AI capabilities to enhance decision-making and outcomes. | Adherence to laws, regulations, and standards governing the development and use of AI. |
| Key Goals | Ensure fairness and equity in AI outcomes. Prevent discriminatory or harmful impacts. Promote inclusivity. | Increase transparency and trust in AI. Enable accountability for AI decisions. Facilitate debugging and improvement of AI models. | Establish ethical guidelines for AI development. Promote responsible AI practices. Address societal impacts of AI. | Optimize the synergy between human and AI intelligence. Enable effective human oversight and control. Improve decision quality and efficiency. | Meet legal and regulatory requirements. Minimize legal and financial risks. Ensure compliance with industry standards. |
| Key Practices | Data preprocessing and augmentation. Algorithmic bias detection and correction. Fairness metrics and evaluation. | Model interpretation techniques (LIME, SHAP). Visualization of AI decision processes. Providing rationale and explanations for AI outputs. | Developing ethical codes of conduct. Conducting ethical impact assessments. Implementing ethical governance structures. | Human in loop systems. Collaborative AI design and development. Tools for human-AI interaction. | Staying informed about relevant regulations (e.g., EU AI Act). Implementing compliance monitoring systems. Documenting AI systems and processes. |
| Importance | Crucial for building trust and ensuring AI systems are used responsibly and ethically. | Essential for accountability, transparency, and building user trust in AI. | Fundamental for guiding the ethical development and deployment of AI in society. | Vital for maximizing the benefits of AI while maintaining human oversight and control. | Necessary for mitigating legal risks and ensuring responsible AI implementation. |
| Examples of application | Fairness in loan approval algorithms. Bias detection in facial recognition systems. Equal representation in AI training data. | Explaining credit risk scores. Providing reasons for medical diagnoses. Showing how AI makes hiring decisions. | AI ethics guidelines in healthcare. Responsible AI in autonomous vehicles. Ethical data usage in marketing. | AI-assisted medical diagnosis with human review. Collaborative robots in manufacturing. Human oversight in AI-driven financial trading. | Compliance with data privacy regulations (GDPR). Adherence to industry-specific AI standards. Reporting AI system information to regulatory bodies. |

Table 1: Key Aspects of Ethical AI Use

To develop artificial intelligence (AI) responsibly, we need a simple and clear plan that focuses on fairness, openness, ethics, collaboration, and following rules. These parts are not separate; they work together to ensure AI is used the right way. It is important to tackle bias, make AI systems easy to understand, base AI development on ethical principles, build strong partnerships between humans and AI, and keep up with new regulations. Doing these things helps us deal with AI’s complexities. This well-planned approach is not just about safety; it is necessary for building trust, getting the most benefit from AI, and ensuring it has a positive and fair impact on society. The journey to ethical AI does not stop; it requires us to always pay attention and adapt as needed. The aim is to make sure technology helps people in a fair and sustainable way, now and in the future.
